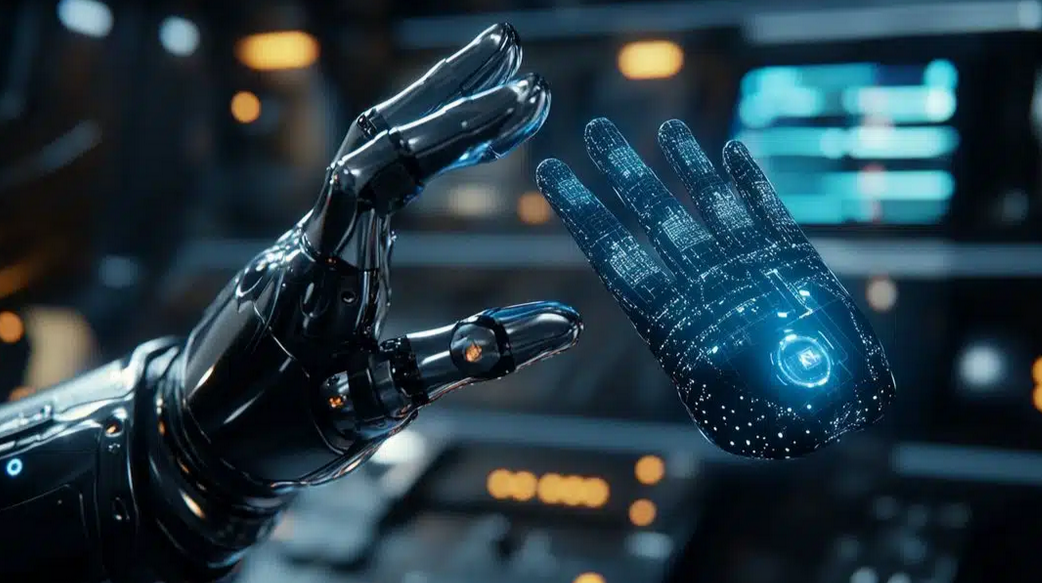

Meta, the parent company of Facebook and Instagram, is advancing robotics research through its Fundamental AI Research (FAIR) team. The team's latest initiative aims to give robots a human-like ability to feel, comprehend, and manipulate objects through touch. With tactile sensing, these AI-driven robots can interact more intelligently with their environments and coexist more safely with humans.

The ability of robots to perceive touch is a major step toward advanced machine intelligence (AMI). Meta’s FAIR researchers recently highlighted this capability alongside innovations like NotebookLlama, demonstrating how tactile AI can enhance not just robotics but also applications in manufacturing, healthcare, and beyond.

Innovations in Robotic Touch: Sparsh and Meta Digit 360

Meta FAIR has introduced technologies designed to give robots sophisticated tactile abilities. Among these is Sparsh, a general-purpose encoder for vision-based tactile sensing that is designed to work across different sensor types and tasks. Sparsh lets AI systems interpret the image-like signals these touch sensors produce, giving robots the ability to “understand” the objects they handle.

Another key innovation is Meta Digit 360, a tactile fingertip device equipped with highly advanced sensory technology. The AI embedded in this device can determine the exact pressure needed to lift, spin, or manipulate objects while also identifying the properties of each item. This capability closely mimics human dexterity, making robots far more adaptive in complex tasks.

Together, Sparsh and Meta Digit 360 let robots handle delicate or intricate tasks with a precision that was previously limited to human operators.

Transferring Touch: From Palm to Fingertips

One of the most intriguing challenges Meta FAIR addressed is replicating the way humans transfer touch information from the fingers to the brain. The Meta team worked on Digit Plexus, a standardized hardware-software platform developed in partnership with Wonik Robotics. This platform integrates touch sensors in multiple configurations across a single robotic hand, mimicking how humans detect texture, pressure, and temperature.

In a detailed FAIR blog post, Meta explained that human hands communicate complex tactile information, such as whether a cup of coffee is too hot or how much force is needed to swipe a smartphone screen. Replicating this mechanism in robotic systems allows machines to “feel” in a human-like way.

Through the Digit Plexus platform, AI-driven robotic hands can process this tactile input in real time, adjusting grip strength and motion to suit the object’s size, weight, and fragility. This makes interaction between humans and robots safer and more effective in both domestic and industrial environments.
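To make the idea of adjusting grip strength from tactile feedback concrete, here is a minimal Python sketch of a feedback loop. It is an illustration only, not Meta's implementation: the names `GripLimits`, `next_grip_force`, and `run_grip_loop`, the boolean slip signal, and the step sizes are all hypothetical assumptions.

```python
from dataclasses import dataclass


@dataclass
class GripLimits:
    """Hypothetical force bounds for one object, in newtons."""
    min_force: float  # lightest grip the controller will ever apply
    max_force: float  # cap chosen to avoid damaging a fragile object


def next_grip_force(current_force, slip_detected, limits, step=0.2):
    """Return the grip force for the next control tick.

    Assumption: the tactile sensor reports a simple boolean slip signal.
    On slip, tighten by `step`; otherwise relax slowly so the hand
    settles near the lightest grip that still holds the object.
    """
    if slip_detected:
        proposed = current_force + step
    else:
        proposed = current_force - step * 0.25
    # Clamp so the result always stays within the allowed range.
    return max(limits.min_force, min(limits.max_force, proposed))


def run_grip_loop(slip_readings, limits, start_force):
    """Apply the update rule over a sequence of slip readings."""
    force = start_force
    history = []
    for slipping in slip_readings:
        force = next_grip_force(force, slipping, limits)
        history.append(force)
    return history


if __name__ == "__main__":
    limits = GripLimits(min_force=0.5, max_force=2.0)
    # Two ticks of slip followed by a stable tick.
    print(run_grip_loop([True, True, False], limits, start_force=1.0))
```

The clamp is the part that matters for fragile objects: however often slip is reported, the commanded force never exceeds `max_force`.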

Applications of Tactile AI in Industry and Daily Life

The potential applications for Meta’s tactile AI research are vast. By equipping robots with human-like touch, industries can achieve higher efficiency and safety in tasks that require delicate handling or dexterous manipulation.

Manufacturing:

Robots with tactile intelligence can handle fragile items like glass, electronics, and medical devices, reducing breakage and improving production quality. They can also perform complex assembly tasks, such as wiring or intricate mechanical operations, with precision. This reduces human labor costs and enhances overall manufacturing efficiency.

Healthcare:

In medical settings, tactile AI can transform patient care. Robots capable of sensing touch could assist with surgeries, rehabilitation, or caregiving, ensuring precise force application when handling patients or medical instruments. This reduces human error and enhances patient safety.

Human-Robot Interaction:

By mimicking human touch, robots can interact more naturally and safely with humans. For example, robots could hand objects to workers or assist elderly individuals without causing harm. This represents a significant step toward seamless human-robot collaboration in daily life.

Advanced Machine Intelligence and the Future

Meta’s initiative reflects a broader ambition in advanced machine intelligence (AMI). By developing tactile perception, robots move closer to human-level comprehension of their environment. Fully autonomous robots will need AI systems that can sense touch, interpret that data, and act on it without human intervention.

The combination of hardware (Digit 360 and Digit Plexus) and AI (Sparsh) allows machines not just to react but to understand context. For instance, a robot can discern the difference between a delicate teacup and a heavy tool, adjusting its grip accordingly. These advancements are key to enabling robots to perform more intricate tasks without constant human supervision.
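The teacup-versus-tool example can be sketched as a small calculation. The Python snippet below assumes a simple two-finger pinch grasp held by friction, where the grip force F must satisfy 2·μ·F ≥ m·g. The `TactileEstimate` type, the fragility score, the friction coefficient, and the force cap are hypothetical values chosen for illustration, not outputs of Sparsh or Digit 360.

```python
from dataclasses import dataclass

G = 9.81  # gravitational acceleration, m/s^2


@dataclass
class TactileEstimate:
    """Hypothetical object properties inferred from touch."""
    mass_kg: float
    fragility: float  # 0.0 (robust) to 1.0 (very fragile)


def choose_grip_force(est, friction=0.5, safety=1.5, fragile_cap_n=3.0):
    """Pick a target grip force in newtons for a two-finger pinch.

    Friction at both contacts must carry the weight:
        2 * friction * F >= mass * g
    so the minimum holding force is mass * g / (2 * friction).
    A safety factor is applied, and fragile objects are capped.
    """
    required = est.mass_kg * G / (2 * friction)
    target = required * safety
    if est.fragility > 0.7:
        # Fragile object: never squeeze harder than the cap. If the cap
        # is below `required`, a real system would need another strategy
        # (for example, supporting the object from below).
        target = min(target, fragile_cap_n)
    return target


if __name__ == "__main__":
    teacup = TactileEstimate(mass_kg=0.2, fragility=0.9)
    wrench = TactileEstimate(mass_kg=1.5, fragility=0.1)
    print(f"teacup: {choose_grip_force(teacup):.2f} N")
    print(f"wrench: {choose_grip_force(wrench):.2f} N")
```

With these assumed numbers the heavy tool gets a grip several times firmer than the teacup, which is the kind of context-dependent adjustment described above.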

Challenges and Next Steps

Despite these breakthroughs, challenges remain. Integrating tactile AI into scalable robotic systems requires precision manufacturing, robust sensor calibration, and seamless AI software integration. FAIR is actively researching ways to overcome these challenges while ensuring that robots can operate safely alongside humans.

Meta emphasizes ethical AI usage, particularly in environments where robots interact with vulnerable populations, such as hospitals or homes. Ensuring that AI systems respond predictably to touch stimuli without unintended consequences is a top priority.

The Bigger Picture

Meta FAIR’s tactile AI research is not just about building better robots; it is about reimagining the relationship between humans and machines. By equipping robots with the sense of touch, AI becomes more intuitive, efficient, and responsive. This opens new avenues in industries, healthcare, and even daily life, where robots can augment human capabilities rather than replace them.

In the coming years, we can expect these innovations to impact fields ranging from industrial automation to personal robotics, surgical assistance, and logistics. Robots that can “feel” will be able to perform tasks that were previously impossible for AI, creating safer and more efficient interactions in a wide range of settings.

Conclusion

Meta’s FAIR team is pushing the boundaries of what AI-powered robots can achieve by giving them human-like tactile abilities. Through innovations like Sparsh, Meta Digit 360, and the Digit Plexus platform, robots can sense, understand, and manipulate objects with unprecedented precision.

This research has the potential to transform industries, improve healthcare, and enhance human-robot interaction, all while pushing advanced machine intelligence forward. As these technologies mature, the line between human and robotic capabilities will keep blurring, pointing toward a future where AI-driven machines can safely and intelligently coexist with humans.