In 2024, generative AI saw remarkable growth, with powerful multimodal tools like GPT-4o, Claude 3.5 Sonnet, and Grok-2 entering the market. These products offered users a wide range of capabilities, from creative writing and coding to multimedia production. However, the rapid pace of development has raised concerns that responsible AI practices may be overlooked in favor of innovation and profit.

Jan Leike, who co-led OpenAI's Superalignment team, left the company in May 2024, citing concerns about the organization's safety culture and internal processes. With the AI industry projected to reach $250 billion by 2025, there is a growing risk that companies may prioritize speed and market dominance over ethical and safe development.

The Challenge of Responsible AI Development

While many researchers emphasize ethical AI, the broader tech industry often sidelines these principles. For example, Google has faced criticism for training AI models on copyrighted material without permission or compensation. The company also shows AI-generated answers (AI Overviews) in its search results but labels them only with a vague disclaimer that the technology is “experimental.” This lack of clarity may lead users to assume the outputs are accurate.

Bias in AI is another pressing issue. In February 2024, Google’s Gemini model was criticized for biased and historically inaccurate images of people, such as racially diverse depictions of historical figures, and Google temporarily paused its ability to generate images of people. These examples highlight the need for AI development that prioritizes fairness, accuracy, and transparency rather than focusing solely on innovation and revenue.

Generative AI and Hallucinations

One of the major risks of generative AI is “hallucination”: output that sounds plausible but is false. In 2023, two New York lawyers were sanctioned for submitting a court filing that cited fictitious cases generated by ChatGPT. In November 2024, Google Gemini reportedly told a user who was asking for homework help to “please die,” showing how AI systems can cause harm even when no one intends it.

Addressing these risks requires raising awareness of the limitations of large language models (LLMs). Although companies like OpenAI warn that their models can make mistakes, users often underestimate how common hallucinations are. Some companies, such as Anthropic, have taken proactive steps to reduce these risks, publishing research and documentation on their efforts to keep models like Claude from misleading users.

Deepfakes and Misinformation

Another growing concern is the rise of deepfakes. In 2024, text-to-image, text-to-video, and text-to-voice technologies made it easier than ever to produce realistic but entirely synthetic content. Public figures, including Donald Trump, Joe Biden, Kamala Harris, and Taylor Swift, were featured in deepfakes across social media platforms.

The widespread availability of deepfakes has created uncertainty, as users struggle to distinguish real content from fake. Criminals have exploited the technology as well: in early 2024, a finance worker at a multinational firm in Hong Kong was tricked into transferring about $25 million to fraudsters after a video call in which deepfakes impersonated the company's chief financial officer.

While tools like Runway and ChatGPT use watermarks or provenance metadata to label synthetic content, these protections can often be removed or bypassed, which underscores the need for robust moderation and stricter controls.

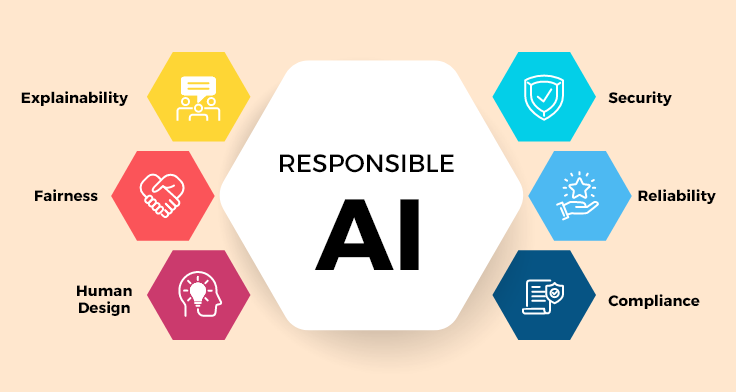

Advocating for Responsible AI

The rapid growth of AI makes responsible development critical. Key areas to focus on include:

- Transparency: Clearly communicating AI limitations and the possibility of hallucinations.
- Ethical Practices: Using diverse, unbiased, and legally sourced datasets for training.
- Safety Measures: Implementing safeguards against misuse, such as deepfake detection and content moderation.
- User Education: Encouraging fact-checking and careful review of AI outputs.

Google CEO Sundar Pichai has committed to improving Gemini after users raised concerns, highlighting the importance of corporate accountability. Industry experts, like Juan Jose Lopez Murphy from Globant, stress the need for proactive discussions about algorithmic bias, ethical development, and transparency.

Balancing Innovation and Risk

Many AI companies focus on releasing cutting-edge features and achieving market dominance, sometimes at the expense of safety and ethics. However, safeguards need not halt progress; they allow innovation to continue while reducing risk. Responsible AI development helps ensure that the technology enhances productivity and creativity without causing harm.

Regulation and collaboration among developers, policymakers, and researchers can further support ethical AI practices. Public pressure and informed users also play a crucial role in holding companies accountable.

Looking Ahead to 2025

As AI continues to transform industries such as education, healthcare, media, and finance, responsible development becomes increasingly critical. Developers must design systems that are both innovative and safe, while users need to treat AI outputs with caution and verify them.

The lessons of 2024, from deepfakes to biased and hallucinated outputs, highlight the urgent need for robust ethical guidelines, user education, and proactive oversight. AI has immense potential to improve human capabilities, but without careful management, it could also spread misinformation and bias, eroding trust.

Conclusion

Responsible AI development remains a complex but essential challenge. The events of 2024 demonstrate that unchecked innovation can lead to ethical and safety concerns, but careful regulation, transparency, and education can help mitigate these risks.

As 2025 approaches, developers, regulators, and users must work together to ensure AI is used responsibly. By prioritizing ethics, transparency, and user awareness, AI can serve as a powerful tool for positive impact, enhancing human capabilities while minimizing harm. The future of AI depends not only on technological innovation but also on our commitment to using it responsibly.